In today's cloud-native, microservices-driven world, fast, secure, and highly available web applications are expected rather than optional. Two components that help make this possible are load balancers and reverse proxies.

Although these terms are often used interchangeably, they solve different problems and offer different benefits. Understanding how they work — individually and together — can help you design a more scalable, reliable, and secure architecture.

In this article, we’ll explore:

- What is a load balancer?

- What is a reverse proxy?

- Load balancer vs reverse proxy (differences)

- Why you need both

- Real-world use cases

- How they improve performance, scalability, and security

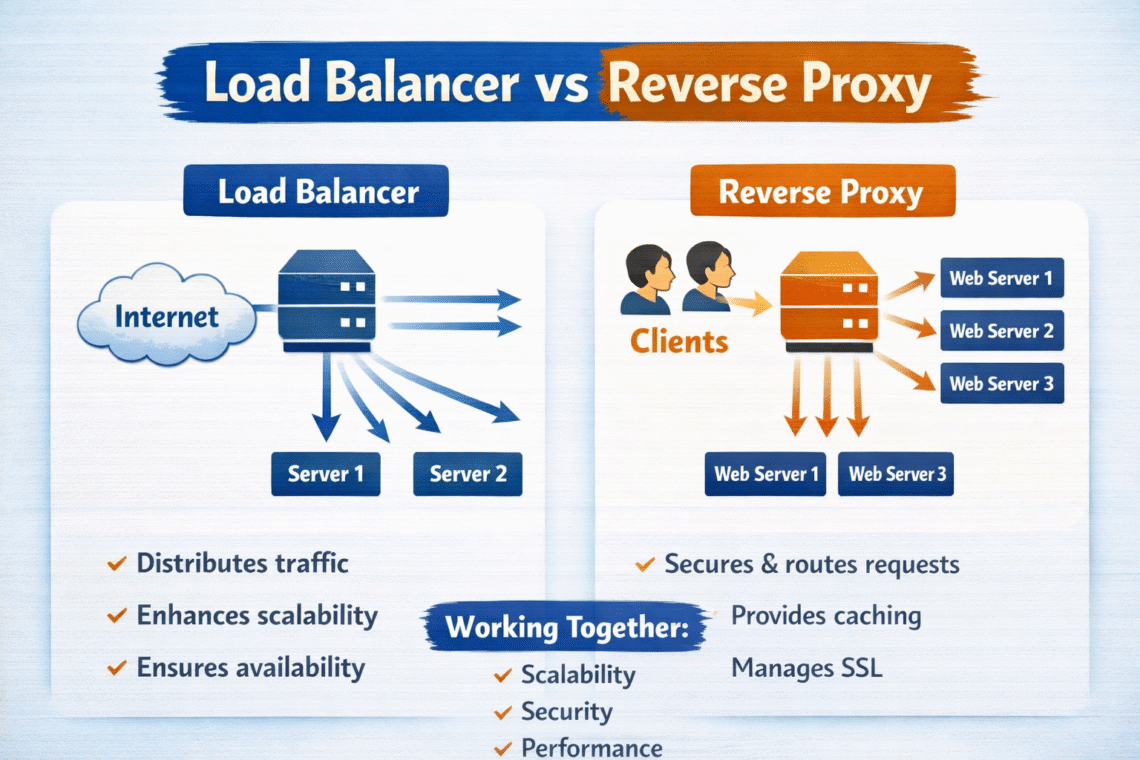

What is a Load Balancer?

A load balancer is a system that distributes incoming traffic across multiple backend servers to ensure no single server becomes overwhelmed.

Key Benefits:

- Improves application availability

- Enhances fault tolerance

- Increases horizontal scalability

- Prevents server overload

How it works:

When a user sends a request, the load balancer selects the most appropriate backend server using algorithms like:

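- Round Robin: each new request goes to the next server in a fixed rotation

- Least Connections: each new request goes to the server with the fewest active connections

- IP Hash: the client's IP address is hashed so the same client keeps reaching the same server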
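To make the three algorithms above concrete, here is a minimal Python sketch of each selection strategy. The backend addresses and the class names (`RoundRobin`, `LeastConnections`, `IPHash`) are assumptions made up for illustration; production load balancers such as NGINX or HAProxy implement these ideas with weights, health checks, and concurrency handling that this sketch leaves out.

```python
import hashlib
import itertools

# Hypothetical backend addresses, used only for illustration.
BACKENDS = ["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"]


class RoundRobin:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends):
        self._cycle = itertools.cycle(backends)

    def pick(self):
        return next(self._cycle)


class LeastConnections:
    """Pick the backend with the fewest in-flight requests."""

    def __init__(self, backends):
        self.active = {b: 0 for b in backends}

    def pick(self):
        backend = min(self.active, key=self.active.get)
        self.active[backend] += 1  # a request starts on this backend
        return backend

    def release(self, backend):
        self.active[backend] -= 1  # the request has finished


class IPHash:
    """Map a client IP to the same backend on every request."""

    def __init__(self, backends):
        self.backends = list(backends)

    def pick(self, client_ip):
        # A stable hash (not Python's per-process hash()) keeps the
        # mapping consistent across restarts.
        digest = hashlib.sha256(client_ip.encode()).digest()
        index = int.from_bytes(digest[:8], "big") % len(self.backends)
        return self.backends[index]
```

Round robin suits servers of similar capacity handling similar requests, least connections copes better when request durations vary, and IP hash is a simple way to keep a client on one server when session state lives there.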

Common Use Cases:

- High-traffic websites

- SaaS platforms

- E-commerce systems

- Streaming platforms

Example tools: NGINX, HAProxy, AWS Elastic Load Balancing, Google Cloud Load Balancing

What is a Reverse Proxy?

A reverse proxy sits between the client and the backend servers and acts as a gateway that forwards client requests to the appropriate service.

Unlike a load balancer, a reverse proxy focuses on:

- Security

- Caching

- SSL termination

- Routing

- Authentication

Key Benefits:

- Hides internal servers from the internet

- Provides a central place to run a Web Application Firewall (WAF)

- Improves performance with caching

- Manages SSL certificates

- Enables API routing and microservice management
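Two of the responsibilities listed above, routing and caching, can be sketched in a few lines of Python. This is a simplified in-memory model, not how any particular proxy is implemented: the `ReverseProxy` class, the route prefixes, and the two upstream functions are hypothetical, and the fixed time-to-live cache ignores the `Cache-Control` headers that a real proxy would honor.

```python
import time


class ReverseProxy:
    """Route requests by path prefix and cache GET responses briefly."""

    def __init__(self, routes, cache_ttl=30.0, clock=time.monotonic):
        # Longest prefix first, so "/api/users" wins over "/".
        self.routes = sorted(routes.items(), key=lambda r: len(r[0]), reverse=True)
        self.cache_ttl = cache_ttl
        self.clock = clock
        self.cache = {}  # path -> (expires_at, response)

    def handle(self, method, path):
        # Serve from cache when a fresh GET response is available.
        if method == "GET":
            hit = self.cache.get(path)
            if hit and hit[0] > self.clock():
                return hit[1]
        # Otherwise forward to the first upstream whose prefix matches.
        for prefix, upstream in self.routes:
            if path.startswith(prefix):
                response = upstream(method, path)
                if method == "GET":
                    self.cache[path] = (self.clock() + self.cache_ttl, response)
                return response
        return "404 no route"


# Hypothetical upstream services, modeled as plain functions.
calls = []


def users_service(method, path):
    calls.append(path)  # record each time the backend is actually reached
    return f"users:{path}"


def static_site(method, path):
    return f"static:{path}"


proxy = ReverseProxy({"/api/users": users_service, "/": static_site})
```

Calling `proxy.handle("GET", "/api/users/1")` twice reaches `users_service` only once, because the second call is answered from the cache. The client only ever talks to the proxy, which is also why the backend services stay hidden from the internet.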

Common Use Cases:

- Protecting backend services

- Serving cached content

- Enforcing authentication

- Managing microservices

Example tools: NGINX, Traefik, Envoy, Cloudflare

Why Do You Need Both?

While load balancers distribute traffic, reverse proxies manage and protect it.

They complement each other:

- A load balancer helps ensure availability

- A reverse proxy helps ensure security and control

Together they provide:

- Better performance

- Higher uptime

- Stronger security

- Improved user experience

How They Work Together (Architecture Flow)

Flow Example:

User Request

↓

Reverse Proxy (Security, SSL, Caching)

↓

Load Balancer (Traffic Distribution)

↓

Backend Application Servers

- The reverse proxy handles authentication, SSL termination, caching, and filtering.

- The load balancer then distributes traffic evenly to healthy backend servers.
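The flow above can be modeled as two small stages chained together. The Python sketch below is only an illustration under stated assumptions: `EdgeProxy`, `LoadBalancer`, `Backend`, and the token check are hypothetical names, authentication is reduced to a set lookup, and health is a flag that a real system would update through periodic health checks.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Backend:
    name: str
    healthy: bool = True

    def serve(self, path):
        return f"{self.name} served {path}"


class LoadBalancer:
    """Round-robin over the backends currently marked healthy."""

    def __init__(self, backends):
        self.backends = backends
        self._counter = count()

    def forward(self, path):
        healthy = [b for b in self.backends if b.healthy]
        if not healthy:
            return "503 no healthy backend"
        backend = healthy[next(self._counter) % len(healthy)]
        return backend.serve(path)


class EdgeProxy:
    """Reverse-proxy stage: checks the request before passing it on."""

    def __init__(self, load_balancer, valid_tokens):
        self.load_balancer = load_balancer
        self.valid_tokens = set(valid_tokens)

    def handle(self, path, token):
        if token not in self.valid_tokens:
            # Rejected at the edge; no backend ever sees this request.
            return "401 unauthorized"
        return self.load_balancer.forward(path)
```

With two backends wired as `EdgeProxy(LoadBalancer([Backend("app-1"), Backend("app-2")]), {"secret"})`, a request with a bad token is rejected at the edge, valid requests alternate between the two backends, and marking one backend unhealthy sends all traffic to the other.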

Load Balancer vs Reverse Proxy — Key Differences

| Feature | Load Balancer | Reverse Proxy |
|---|---|---|
| Main purpose | Distribute traffic | Secure and manage traffic |
| Improves scalability | Yes | Indirectly |
| Improves security | Limited | Strong |
| Supports caching | Typically no | Yes |
| Hides backend servers | Partly (clients connect to the load balancer's address) | Yes |
| SSL termination | Sometimes | Yes |

Why They Are Helpful

Load Balancer Helps By:

- Preventing server overload

- Handling traffic spikes

- Ensuring high availability

Reverse Proxy Helps By:

- Protecting backend servers

- Reducing latency with caching

- Managing API gateways and microservices

- Enforcing security policies

Final Thoughts

The debate of load balancer vs reverse proxy isn’t about choosing one — it’s about understanding where each fits in your architecture.

- Use a load balancer for availability and scalability.

- Use a reverse proxy for security, routing, and optimization.

- Use both together to build robust, production-grade systems.

If you're building modern web applications, SaaS platforms, or cloud-native services, combining a reverse proxy with a load balancer is one of the best architectural decisions you can make.